All men need laws to govern themselves, or they are in danger of leading nasty, brutish, and short lives. Robots, on the other hand, need no such thing. But alas, we are mere mortals so here are some rules that we hold each other to.

Face your fears: a robot so advanced, so intelligent, so fearsome, that it can easily collapse nations and rip families apart at their seams.

We spend a lot of time with each other. We even count the seconds! This is a log of every magical moment.

esk95

mys29

jjh353

rpc222

flk26

kl645

"Cause the players gonna play, play, play, play, play. And the haters gonna hate, hate, hate, hate, hate. - Taylor Swift

The goal of this project was to build an intelligent physical system that can perceive, reason about, and act upon its environment. The system took the form of a maze-mapping robot that can:

Details of the maze specifications and final competition guidelines can be found here on the course webpage.

Thus, our robot met all the criteria of an intelligent physical system.

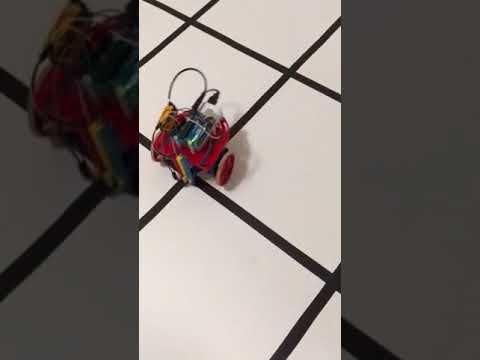

Here's a video of our robot completing a full sucessful run of a maze!

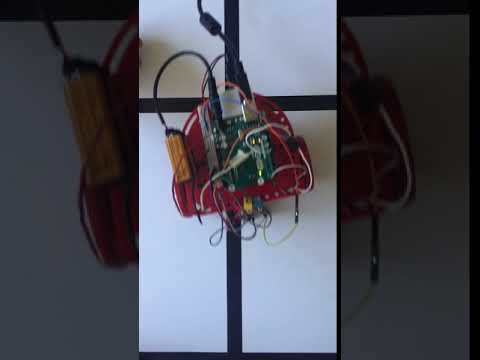

Here's some pictures of our completed robot! All the various parts have been labeled.

Our robot was approximately 16 cm tall, 16 cm wide (20 cm if you include the width added by the treasure sensors), and 19 cm long. Our chassis, wheels, ball caster, and all mounts were the default designs provided to us at the start of the semester (the cad and .stl files necessary to make them are available on the course/TA GitHub). We used the standard servos made available to us (Parallax Continuous Rotation Servos). We added rubber tires to the wheels to increase traction. We spent the majority of time working on the electronic components of the project.

We had two separate power sources for our robot (united only by a common ground). A 9V battery powered our Arduino. We mounted it using velcro to some free real estate on our chassis. The Arduino 5V and 3V pins were then used to power much of the circuitry. All the sensors mounted on the PCB and protoboard were powered off of these pins (IR sensors, their corresponding amplifiers, microphone, and the Schmitt trigger). These pins can source at least a few hundred mA of current, and by our calculations we drew much less than that (cumulatively less than 20mA). We also powered our system off of a 5V power bank. We temporarily placed the power bank underneath the Arduino (raised on makeshift stilts). We built around it, and this became its final position on the robot. A USB cable fed the power to two power rails positioned on either side of the protoboard. While we did have header pins soldered onto these power rails, they proved to be an extremely ineffective variety that led to many loose wires and much frustration. We bypassed these headers by simply soldering the power lines of the line sensors, servos, and wall sensors. While this removed much of our ability to make changes to the robot, we did this at a very late stage in the process, so it did not end up being a problem. Rather, because there were two power rails, it allowed us to clean up wiring significantly.

The physical pin assignments for the Arduino Uno were as follows:

As one can see from the images above, we had two additional boards for circuitry - a protoboard and a PCB. The protoboard had three 'systems' on it. It had a Schmitt Trigger, which converted the analog input from the junction line sensor into a digital signal (see the Milestone 4 page for a full description of the circuit). It also had a manual start button, which simply connected 3.3V to an input pin (with a resistor in series, of course) creating an active high button we could poll. Finally, it had screw terminals for the 5V power bank to connect to. These fed into a set of power rails on either side.

The PCB (printed circuit board) contained the circuitry for the IR sensor amplification (so that we could accurately detect treasures) and the microphone output circuit. The microphone output circuit is explained in lab 2 and the IR circuit in Milestone 2. We put two versions of the IR circuit on the board, so that we could have two treasure detection systems running at the same time, on either side of the robot. We were motivated to put these circuits on a PCB because we feared that they would be too fragile on a breadboard, and too difficult to build on a protoboard. The PCB ended up being extremely successful, and ensured the operation of all systems on it. The GitHub contains a folder which holds all relevant schematic files.

The following diagrams illustrate our high-level algorithm for controlling the robot.

First, the robot waits for a start signal, either from the 660 Hz tone, or the manual start button:

Then, the robot began to move through and map the maze according to the following algorithm:

Our final robot employed 3 sensors for line following and 1 sensor for junction detection. All 4 sensors were used in conjunction to ensure our robot could make proper turns. Our movement code, from line following to turning, ended up being something we worked to perfect over the course of the entire semester. When we began integrating turns into larger code like the DFS algorithm, we found ourselves having to continuously tune our movement code. Ultimately our line following code was exceptionally robust and was able to handle any mistakes in the robots movements and our turn code with the new junction detector was very reliable. We were very happy with our results.

Our team decided to purchase a Polulu QTR-3RC Reflectance Sensor Array to aid in line following. Initially, we found our servos to be very tempermental. The right servo was far more sensitive to small changes in it's value than the left and the servos didn't always increase/decrease speed as we expected them to. This caused a lot of issues in our attempts at error correction through PID. Once we had played around with the servos enough to gain a better understanding of their quirks, we were able to have some semblance of control. However, our line sensors were still unreliable and the readings were very inconsistent. To alleviate some of our issues we decided to puchase the sensor array. This provided many benefits:

The sensor array turned out to be exceptionally useful. We employed the use of the calibration method to ensure that our reading were always consistent and scaled appropriately from 0 to 1000 from white to black. We included a calibration in our final design such that every time we reset the robot, we would perform a 2 second calibration where we move all 3 sensors across the white posterboard and black tape so they know the full range of their readings. This greatly improved our line detection reliability. We also used a built-in method which returns the location of a black line relative to all 3 sensors. For example, with 3 sensors, our line location value can range from 0-2000 where 1000 means the black line in centered amongst the 3 sensors and the closer to 0 or 2000 the more left or right the line is respectively. We then implemented a PD control algorithm, calculating our error by comparing the current location of our line to the ideal value of 1000. We tried a variety of Kp and Kd values on an attempt to optimize our line-following correction. Ultimately, we realized our 2 servos required different error adjustment since their sensitivity levels varied so much. We increased all error adjustments on our left servo by a factor of 1.5 relative to the right servo and our line detection and following worked great! As a team that was behind on line following from milestone 1, this success was a great step in the right direction and our robust line-following ability ended up being one of our best strengths in our final implementation.

At first, we thought we could implement junction detection and turns with our 3 line following sensors alone. While this is definitely plausible, the location of our line sensors made it difficult. The way our chassis was designed, our 3 line sensors were attached to the front of our robot. When we tried to implement turns with this setup, we could successfully turn the robot, however, because our robot detects junction that is directly in front of it, we couldn't ensure that the center of our robot would stay centered on the junction while turning. When we had to implement treasure detection so that the treasure sensors would line up exactly at the junction because of their limited range, this posed an issue. We considered 2 solutions:

We decided to add a 4th line sensor, a junction sensor, along the axis of roation underneath our bot. Seeing as how we still didn't have enough analog pins, we implemented a schmitt trigger for this line sensor. An oscilloscope screen depicting our functioning schmitt trigger is pictured below:

Once we had a functional junction sensor, we were able to improve our turns. Very simply put, our turns work by first detecting a junction on our junction sensor, so the axis of rotation of our bot was centered on the juntion. When the turn method is subsequently called (as determined by the high level DFS depending on the walls, etc.), the motor speeds are set to pre-determined Left and Right turn speeds. Determining appropriate turning speeds was another task which took a long time because of our finicky servos. They didnt't quite work as we expected but after much trial and error we found satisfactory values that implemented almost perfect turns so that wheel speeds were equal and opposite and the robot appeared to spin in place. Once the robot was turning in the correct direction, we implemented a small delay to ensure that all the line sensors would move past the initial straight line they were following. Then we would continue to spin the robot until the line sensors once again found a line, meaning the middle sensor saw black while the left and right sensors saw white. This meant that the robot had turned 90 degrees and had competed it's turn.

At the completion of a turn, we would also ensure that the juncion sensor was on black, which made sure that our robot was still centered on the junction. For example, occasionally our left turns would end up with the center of our robot slightly behind the junction, so the treasure sensors were not correctly aligned. This check would move the robot slightly forward until the junction sensor was on a junction. Overall on our turns, we had to find a good threshold for black vs white when trying to stop the robot from turning once it had found a line. We found if we were too specific in our requirements, the robot wouldn't be able to satisfy them all and would instead keep turning. Once we found a good middle ground, our turns were very reliable! We implemented 180 degree turns by doing 2 subsequent left turns. After a turn, the orientation of our bot wasn't always perfect, however our robust line following was able to handle the crooked bot and correct it's orientation as it moved forward from a junction.

At the very top level of our final algorithm is wall detection. We started working with the distance sensors in Milestone two, first by collecting data from the long range and short range sensors. We wired the sensors up to a simple circuit and wrote basic code in Arduino to display the values when a wall was placed varying distances away from the sensors. After analyzing the data, we decided that because the long range sensors were a lot more unpredictable in determining how close a wall is within one half grid-space of the maze, we would use the short range sensors. We looked at the graphs we collected of the values from the sensors when the wall was placed 10 - 20 cm away, and decided that our threshold sensor value would be 100. That is to say, when the sensor outputted a number above 100, we would determine that there was a wall within one grid space of the robot. A graph of distance value outputted from the sesnor vs distance from one of our short-range sensors is shown below, along with the code that would drive the robot to determine whether or not there was a wall within one grid space of its right, front, or left sides.

if (distanceValue > 100) {

wall = true;

servoL.write(90);

servoR.write(90);

delay(500);

}

As our design grew more complicated, and we started to integrate the DFS algorithm for traversing the maze, we realized just how important accuracy of wall detection really needed to be. While watching the robot move through the grid, we noticed that sometimes the robot would not behave as we would expect, moving into places it had already been, ignoring main parts of the maze, and even running itself into walls. After noticiing these strange behaviors, we decided we needed to take a second look at wall detection. We collected more data from the robot, this time taking a careful look at each individual left, right, and middle sensors not simply as generic short-range distance sensors, but as individual pieces of hardware. From this closer look, we were able to determine 3 different sensitivity values for each sensor that would help us more accurately predict when a wall was to the front, left, or right of the robot. Once we added these updated values to our algorithm, our robot's movement through the maze significantly improved.

At the nucleus of our intelligent physical system is the depth-first-search (DFS) algorithm. The DFS is an algorithm for traversing nodes in some graph. The DFS controls the route the robot takes through the maze. At a very high level, the DFS essentially entails going as deep as possible, in terms of distance from the initial position, until it hits a dead end or cannot go to a new location. If it hits a dead end or cannot go to a new location, it will backtrack to the previous location. Here's a diagram that depicts how the DFS algorithm works:

The DFS cannot begin until the robot acquires information about its surroundings. Once this is done, the DFS begins by marking the current location in the maze array as explored. After this, the DFS algorithm removes the current location from the frontier set. This is done so that the robot doesn't end up confusing places it has already visited with places it hasn't already visited. After this step, the robot adds unvisited surroundings nodes to the frontier set. This is done so that the robot knows that there are regions of the maze that it has not yet visited, and are accessible. Next, the algorithm checks if the frontier set is empty. An empty frontier set indicates that the robot has visited all reachable cells. If the frontier set is indeed empty, it initiates the code performed when the maze navigation is complete. If the frontier is not empty (i.e. there are regions of the maze that the robot knows it can visit but hasn't visited yet), the robot will update its current position and visited stack. This step is at the crux of the algorithm. If the robot can move to a spot that it has never visited before, it will move to this spot and push its location to the visited stack. If all the spots it can go to have already been visited, it will pop from the visited stack and move to this position.

This algorithm guarantees that the robot will be able to traverse all reachable cells from the initial position in which it is placed. However, this algorithm makes no guaranetees as to the efficiency of the route taken.

In order to extract frequency-domain information from the microphone and phototransistor sensors, we took a Fourier transform of the time-domain signal we were reading from the sensors. We utilized the Fast Fourier Transform library to efficiently analyze the data. The FFT algorithm returns an array where each element refers to the magnitude of the frequency component in that specific frequency bin. Thus, we needed to check the correct bin according to the frequency we wanted to detect. We decided to use a 128-point FFT because it was faster and used much less memory than the 256-point FFT, while still differentiating between the frequencies we were detecting.

To detect a 660 Hz tone we used an electret microphone as pictured below:

By taking a FFT, we were able to distinguish the frequency content of the audio signal from the microphone. The following graph is a FFT of a 660 Hz tone.

Thus, to implement start detection, we just needed to check if bin 10 is above a certain threshold. However, this simple implementation caused our robot to false start if it heard any signal with a large enough ~660 Hz component. To account for this, we took 30 FFTs in a row, and only start the robot if 20 of the 30 FFTs returned a magnitude above the threshold. While this helped make our start detection more immune to outside noise, it was not completely flawless because we did have a false start during the final competition when there was loud cheering. To make our start detection less sensitive, we could have taken more than 30 FFT samples because the start tones generally lasted for a long period of time.

Our robot had to be equipped with the ability to accurately detect modulating IR frequencies emitted by "treasures" on the maze walls at junctions. In order to improve the accuracy of the detection, we essentially take 30 samples of the signal coming from the treasure detection circuit, and determine the treasure identity from a vote of all 30 samples. Here's a diagram that depicts this process.

The treasure detection querries the analog inputs and generates 30 FFTs and 30 frequency predictions for each input. Afterwards, the program votes on which frequency is dominant. In order for, say, the program to determine that a treasure is not next to it, the plurality of votes from both sensors must indicate that no treasure is nearby. For a 7/12/17 kHz frequency to be determined to be present, the plurality of votes from the left sensor must indicate a 7/12/17 kHz frequency and the plurality of votes from the right sensor must indicate there being no treasure/frequency, or vice-versa. If the vote yields conclusive results, then the robot detection code is terminated and the variable corresponding to the treasure frequency is set appropriately. If the vote is inconclusive (rarely happens), then the 30 samples are re-acquired and the vote is reconducted.

The actual treasure detection circuit essentially consists of a photoresistor in series with an operational amplifier in order to intensify the voltage modulations produced by the incident IR radiation. The exact specifications of the circuit are shown below. The circuit was fabricated on a PCB. We produced 2 replicas of the circuit to be used for both treasure sensors on the left/right side of the robot.

In order for the robot to talk to the base station, we had to write code in Arduino that would synethesize information pertaining to the robot's current location and surroundings and send it to the Arduino base station. Here is a diagram depicting the transmission protocol:

Our base station served as the "middle man" that would allow our robot to communicate to our display. We needed a way to encode and transmit data about the robot's position at any given time, as well as data about treasures, walls, and whether or not the robot was done with its navigation. On the Verilog/ FPGA side, we knew that we would need 2 bytes of data to fully encapsulate all the necessary components. We knew that we wouldn't be able to transmit all of this to the display at once, so we would need to break up data into two parts: one part would transmit data about the position of the robot, and the other part would transmit data about the walls and treasures. To implement this, we reserved two bits for indicating whether or not the byte was relaying information about the walls and treasures, or if it was relaying information about position. We also reserved two valid bits, that we could turn high and low incrememntally so the verilog would be able to update on the rising edge. All of the planning and consideration led us to our final two byte scheme, a diagram of which is shown below:

We realized that the robot did not necessarily need to send all 16 bits of this data. For example, the bits corresponding to indication and valid were components strictly relevant to the base-station and FPGA, but we wanted our bytes to remain consistent to avoid confusion, and we thought that the extra few bits of information would not take long to transmit from the robot to the base-station, so we decided to have our robot send all 16 bits of data, where those four bits were essentially don't cares.

Once the base station recieves the 16 bit word from the robot, it decodes it by converting into a string of length 16 with the same information. The code for this conversion is shown below:

String disassembleWord(word data) {

String stringToReturn;

for (int i = 0; i < 16; i++) {

int bitIndex = 15 - i;

stringToReturn += String(bitRead(data, bitIndex));

}

return stringToReturn;

}

After that, we were able to look at the values of individual characters in the string and write highs or lows to the corresponding outputs. The code block that writes information to the FPGA about walls and treasures is shown below:

digitalWrite(A5, LOW); // indication bit

if (dataString.charAt(9) == '1') {

// top wall

digitalWrite(A4, HIGH);

} else {

digitalWrite(A4, LOW);

}

if (dataString.charAt(10) == '1') { // left wall

digitalWrite(7, HIGH);

} else {

digitalWrite(7, LOW);

}

if (dataString.charAt(11) == '1') { // bottom wall

digitalWrite(6, HIGH);

} else {

digitalWrite(6, LOW);

}

if (dataString.charAt(12) == '1') { // right wall

digitalWrite(5, HIGH);

} else {

digitalWrite(5, LOW);

}

if (dataString.charAt(13) == '0' && dataString.charAt(14) == '1') {

digitalWrite(4, LOW);

digitalWrite(3, HIGH);

}

else if (dataString.charAt(13) == '1' && dataString.charAt(14) == '0') {

digitalWrite(4, HIGH);

digitalWrite(3, LOW);

}

else if (dataString.charAt(13) == '1' && dataString.charAt(14) == '1') {

digitalWrite(4, HIGH);

digitalWrite(3, HIGH);

} else {

digitalWrite(4, LOW);

digitalWrite(3, LOW);

}

These arduino outputs were wired to the FPGA, which looked at the indication bit, and updated the information about walls, treasures, and position. The code for this is shown below:

always @ (posedge valid) begin

preX = robotX;

preY = robotY;

if (arduinoInput[6] == 1'b1) begin

updateType = 1'b0;

robotX = arduinoInput[5:4];

robotY = arduinoInput[3:1];

done = arduinoInput[0];

end

if (arduinoInput[6] == 1'b0) begin

updateType = 1'b1;

walls = arduinoInput[5:2];

treasure = arduinoInput[1:0];

end

end The final FPGA display did not end up being much different than the implementation seen in Milestone 4. The same overall structure applies--black lines represent walls, light pink squares are explored spaces, white squares are unexplored, a smaller magenta square in the middle of a square represents the current location of our robot, a small red mark on a square indicates a 7 Hz treasure, a small green mark indicates a 12 Hz treasure, and a small blue mark indicates a 17 Hz treasure. At the end, when our robot is done exploring the maze (or all that it was capable of accessing), the robot icon is turned black and three tones play on the speakers. Any squares that are still white at that point represent unreachable areas on the map.

Our primary focus was providing better on-screen transitions so that the square that was supposed to symbolize our robot looked like it was smoothly traversing across the grid. At the beginning, because of the way our robot turns from junction-to-junction, two packets of information would convey the same location information back to the base-station, making the robot icon to blink in place on the screen. This made it a little harder to keep your eye on the robot, so we had the FPGA check that the new "current location" information was not the same as the previous location recorded in order to make sure that it wouldn't attempt to redraw (causing the blink) the robot in the same place.

As you can see in the image below, there were still some kinks in our FPGA code. If you look closely, the monitor displays a small offset where the simulated walls don't quite meet. We had fixed this bug at one point, but upon switching to another monitor, were incapable of reproducing the same product. Besides the slight rightward shift, the FPGA also had small glitches, that were annoying though not detrimental to the overall real-time mapping of the maze.

Our robot was remarkably successful in its operation. Despite missing the Milestone 4 requirements, our system was fully functional by the Friday before competition. The video above shows a complete run of the maze, with all functionality working, that was taken in lab several days before the competition. We were extremely pleased with how the robot turned out. Last minute testing revealed that our robot was fairly resilient.

Going into the competition, we had two known bugs. The first was that the wireless communication would occasionally drop a packet. As a result, very rarely (<1% of all transmissions), we would get an empty white square. This could have been prevented by waiting for confirmation message from the second Arduino, but this made our robot significantly slower. The second was that very rarely, a fake wall would be detected - i.e. - the robot would pass an open square, and detect and display a wall there. This was extremely problematic, because it could completely ruin a run of a maze. Both of these issues, while serious, did not happen with much frequency, so we decided to live with them and restart the robot if either happened in competition.

Competition day was extremely exciting and satisfying. Several minutes before our first round, a terrible accident had occurred. Our robot, sitting on a table, had detected a 660Hz tone playing from a speaker several meters away and started, crashing to the ground. A quick inspection determined that the damage was not insignificant: Our ball caster mount had broke near where it was mounted to the robot. We ran up to the lab to get a replacement, but it was not necessary. We retightened the screw, which ran through both pieces, and reinforced the joint with much duct tape. A quick test revealed that the system still worked. Our first run was fairly successful: We completed the maze in 90 seconds, with one false start due the cheering and whooping that occurred, and missing one treasure. The missed treasure deduction was later removed because the treasure was improperly placed. Check out the video below.

The second round was much more successful. In order to combat the false start problem, we raised the threshold for the start detection slightly (from 120 units to 140). We finished the maze in 100 seconds (the fastest in our heat!), detecting all treasures and walls successfully. We moved on to the final round!!! We were extremely nervous, because from our observations we were the fourth fastest robot in the final. However, one robot that was faster than ours went off line, and required a reset. This lead us to a third place finish!!!!! We also won the 'Best Team Spirit' Award!

We are extremely proud of our work, our robot, and our team. We'd like to thank Kirsten, Chris, and every TA that helped us over the course of the semester. Go Team One!

int sensorPin = A0;

int voltageValue = 0;

int delayTime = 500;

void setup() {

Serial.begin(9600);

}

void loop() {

voltageValue = analogRead(sensorPin);

Serial.println(voltageValue);

delay(delayTime);

}

int sensorPin = A0;

int outputPin = 11;

float voltageValue = 0;

void setup() {

pinMode(outputPin, OUTPUT);

Serial.begin(9600);

}

void loop() {

voltageValue = analogRead(sensorPin);

analogWrite(outputPin, 255 * (voltageValue-6)/1017);

Serial.println(voltageValue);

//Frequency of PWM is 490.2 Hz

}

#include ;

Servo servo;

int outputPin = 11;

int sensorPin = A0;

float voltageValue;<

void setup() {

// put your setup code here, to run once:<3>

Serial.begin(9600);

pinMode(outputPin, OUTPUT);

servo.attach(outputPin);

}

void loop() {

// put your main code here, to run repeatedly:

voltageValue = analogRead(sensorPin);

float servoSpeed = 180 * (voltageValue-1)/989;

Serial.println(servoSpeed);

servo.write(servoSpeed);

}

#include

Servo servo1;

Servo servo2;

// the setup function runs once when you press reset or power the board

void setup() {

Serial.begin(9600);

servo1.attach(9);

servo2.attach(11);

}

// the loop function runs over and over again forever

void loop() {

servo1.write(0);

servo2.write(180);

delay(5000);

}

Evan, Radhika and Michael

if (fft_log_out[47]>75){

digitalWrite(13, HIGH);

}

else if (fft_log_out[47]<75){

digitalWrite(13, LOW);

}

TJ, Frannie, and Katherine

//detects input on bin 5 and performs start function

if (fft_log_out[4] > 120) {

start();

}

//This function will "start" the robot and perform any functions necessary

for operation. Right now it turns on an LED.

//

void start() {

digitalWrite(13, HIGH);

}

//detects input on bin 19 and performs start function

if (fft_log_out[4] > 120) {

start();

}

Radhika, Katherine, and Evan

module GRID_SELECTOR(

CLOCK_50,

PIXEL_COORD_X,

PIXEL_COORD_Y,

GRID_X,

GRID_Y);

input CLOCK_50;

input wire [9:0] PIXEL_COORD_X;

input wire [9:0] PIXEL_COORD_Y;

output reg [3:0] GRID_X;

output reg [3:0] GRID_Y;

always @ (*) begin

GRID_X = PIXEL_COORD_X >>>7;

GRID_Y = PIXEL_COORD_Y >>>7

if (GRID_X>4'd1) begin

GRID_X = 4'd2;

end

if (GRID_Y>4'd1) begin

GRID_Y = 4'd2;

end

end

endmodule

reg[7:0] grid4[2:0] [2:0];

always @(*) begin

grid[0][0] = 8'd50;

grid[1][0] = 8'd100;

grid[0][1] = 8'd150;

grid[1][1] = 8'd200;

grid[2][0] = 8'd0;

grid[2][1] = 8'd0;

grid[2][2] = 8'd0;

grid[0][2] = 8'd0;

grid[1][2] = 8'd0;

end

int delayTime = 500; //ms;

void setup() {

pinMode(8, OUTPUT);

pinMode(9, OUTPUT);

}

void loop() {

for (int i = 0; i<4; i++){

if (i==0){

digitalWrite(9, LOW);

digitalWrite(8, LOW);

}

else if (i==1){

digitalWrite(9, LOW);

digitalWrite(8, HIGH);

}

else if (i==2){

digitalWrite(9, HIGH);

digitalWrite(8, LOW);

}

else{

digitalWrite(9, HIGH);

digitalWrite(8, HIGH);

}

delay(delayTime);

}

}

reg[7:0] grid1[2:0] [2:0];

always @(*) begin

grid1[0][0] = 8'b11111111;

grid1[1][0] = 8'd300;

grid1[0][1] = 8'd300;

grid1[1][1] = 8'd300;

grid1[2][0] = 8'd00;

grid1[2][1] = 8'd00;

grid1[2][2] = 8'd00;

grid1[0][2] = 8'd00;

grid1[1][2] = 8'd00;

end

reg[7:0] grid2[2:0] [2:0];

always @(*) begin

grid2[0][0] = 8'd300;

grid2[1][0] = 8'b11111111;

grid2[0][1] = 8'd300;

grid2[1][1] = 8'd300;

grid2[2][0] = 8'd0;

grid2[2][1] = 8'd0;

grid2[2][2] = 8'd0;

grid2[0][2] = 8'd0;

grid2[1][2] = 8'd0;

end

reg[7:0] grid3[2:0] [2:0];

always @(*) begin

grid3[0][0] = 8'd300;

grid3[1][0] = 8'd300;

grid3[0][1] = 8'b11111111;

grid3[1][1] = 8'd300;

grid3[2][0] = 8'd0;

grid3[2][1] = 8'd0;

grid3[2][2] = 8'd0;

grid3[0][2] = 8'd0;

grid3[1][2] = 8'd0;

end

reg[7:0] grid4[2:0] [2:0];

always @(*) begin

grid4[0][0] = 8'd300;

grid4[1][0] = 8'd300;

grid4[0][1] = 8'd300;

grid4[1][1] = 8'b11111111;

grid4[2][0] = 8'd0;

grid4[2][1] = 8'd0;

grid4[2][2] = 8'd0;

grid4[0][2] = 8'd0;

grid4[1][2] = 8'd0;

end

always @(*) begin

if (GPIO_0_D[33]==1'd0 && GPIO_0_D[31] == 1'd0) begin

PIXEL_COLOR = grid1[GRID_X][GRID_Y];

end

if (GPIO_0_D[33]==1'd0 && GPIO_0_D[31] == 1'd1) begin

PIXEL_COLOR = grid2[GRID_X][GRID_Y];

end

if (GPIO_0_D[33]==1'd1 && GPIO_0_D[31] == 1'd0) begin

PIXEL_COLOR = grid3[GRID_X][GRID_Y];

end

if (GPIO_0_D[33]==1'd1 && GPIO_0_D[31] == 1'd1) begin

PIXEL_COLOR = grid4[GRID_X][GRID_Y];

end

end

unexplored = 8'b11111111;

walls = 8'b0;

explored = 8'b11110111; //pink

bot = 8'b11100011; //magenta

treasures = 8'b11011100; //yellow

robot_position = 2'd20; // this is the starting grid number

//capture the past position before movement before updating

//the new robot position by marking it explored.

gridcolor[robot position] = explored;

if robot is moving up:

robot_Y -= 1;

if robot is moving down:

robot_Y += 1;

if robot is moving left:

robot_X -= 1;

if robot is moving right:

robot_X += 1;

update grid to show new robot position;

update grid to show previous robot position has been explored;

Michael, TJ, and Frannie

//localparam

localparam CLKDIVIDER_440 = 25000000 / 440 / 2;

//sound variables

reg square_440;

assign GPIO_0_D[1] = square_440;

reg [15:0] counter;

always @ (posedge CLOCK_25) begin

if (counter == 0) begin

counter <= CLKDIVIDER_440 - 1; //reset the clock

square_440 <= ~square_440; //toggle the square pulse

end

else begin

counter <= counter - 1;

square_440 <= square_440;

end

end

module sin_rom(

input [7:0] addr,

input clk,

output reg [7:0] q

);

// Declare the ROM variable

reg [7:0] sine[255:0];

initial

begin

sine[0]<= 8'd127;

sine[1]<= 8'd130;

/// everything in between...

sine[255]<= 8'd124;

end

always @ (posedge clk) begin

q <= sine[addr];

end

endmodule

localparam CLKDIVIDER_A_SIN = 25000000 / 400 / 256; //400 Hz

reg [15:0] counter;

always @ (posedge CLOCK_25) begin

if (counter == 0) begin

counter <= CLKDIVIDER_A_SIN - 1;

if (DAC >= 255) begin

DAC <= 0;

end

else begin

DAC <= DAC + 1;

end

end

else begin

counter <= counter - 1;

end

end

always @ (posedge CLOCK_25) begin

if (reset) begin

freq_state <= 2'b00;

freq_counter <= 25'b0;

end

if (freq_counter == ONE_SEC) begin

freq_counter <= 25'b0;

freq_state <= freq_state + 2'b1;

if (freq_state == 2'b11) begin

freq_state <= 2'b00;

end

end

else begin

freq_state <= freq_state;

freq_counter <= freq_counter + 25'b1;

end // always @ (posedge CLOCK_25)

end

localparam CLKDIVIDER_A_SIN = 25000000 / 400 / 256; //400 Hz

localparam CLKDIVIDER_B_SIN = 25000000 / 800 / 256; //800 Hz

localparam CLKDIVIDER_C_SIN = 25000000 / 600 / 256; //600 Hz

reg [15:0] counter;

always @ (posedge CLOCK_25) begin

if (counter == 0) begin

if (freq_state == 0) begin

counter <= CLKDIVIDER_A_SIN - 1;

end

if (freq_state == 1) begin

counter <= CLKDIVIDER_B_SIN - 1;

end

if (freq_state == 2) begin

counter <= CLKDIVIDER_C_SIN - 1;

end

if (DAC >= 255) begin

DAC <= 0;

end

else begin

DAC <= DAC + 1;

end

end

else begin

counter <= counter - 1;

end

end

Radhika, Katherine and Evan

For the radio subteam of this lab, our goal was to communicate information between two arduinos through radio communication using two transceivers. We wanted to send simulated maze data from one arduino, which was set up as the transmitter, to another arduino, which was setup as a reciever. We also wanted to observe the range of the radio communication with different power/speed settings and look how many packets were successfully transmitted.

As directed in the lab, first, we downloaded the RF24 arduino library. Then, to begin with simple tests of the transceivers, we used the 'Getting Started' code that was provided to us in the lab as opposed to the example code that came with the arduino library. Before we could begin, we had to adjust the identifier numbers for our two pipes using the formula 2(3D + N) + X where D is the day of our lab, N is our team number, and X was 0 and 1 for each of our 2 radios. Our calculated values happened to be 2 and 3, the same as the ones in the sample code, as shown below:

// Radio pipe addresses for the 2 nodes to communicate.

const uint64_t pipes[2] = { 0x0000000002LL, 0x0000000003LL };

We first tested the sample code that was provided to us. We set up one arduino to be the transmitter by typing 'T' into the serial monitor of that arduino. The other arduino automatically became a reciever. The sample code was written to sent the time at which the data was being sent to the reciever. If the data was successfully sent, the transmitter then waits on a confirmation from the reciever. If the data wasn't sent successfully, the code tries to send the data again in 1 second. The code has a 200ms timeout value, so if the receiver hasn't confirmed that it has recieved the data in 200 ms, the code prints out a corresponding statement. If the receiver does confirm before timeout, the calculated round-trip delay time is printed out. This is reflected in the following code:

unsigned long time = millis();

printf("Now sending %lu...",time);

bool ok = radio.write( &time, sizeof(unsigned long) );

if (ok)

printf("ok...");

else

printf("failed.\n\r");

radio.startListening();

// Wait here until we get a response, or timeout (200ms)

unsigned long started_waiting_at = millis();

bool timeout = false;

while ( ! radio.available() && ! timeout )

if (millis() - started_waiting_at > 200 )

timeout = true;

We tried different ranges for sending and receiving data. We didn't see a major drop in successful transmissions until we were almost in 2 separate rooms, so we believe the range of the radio communication is more than enough for our applications.

In the next steps, we modified the data we were sending between arduinos to reflect simulated maze data.

To send the full maze coordinates, we altered the code to send a 5x5 array of unsigned chars. When sending and receiving transmissions, the arduino needs to be told what size packet it will be sending or receiving, so the key is to explicitly state what the maze size was when reading and writing data.

unsigned char maze[5][5];

// different maze values were randomly assigned and sent

//sending

bool ok = radio.write( &maze, sizeof(unsigned char)*25);

//receiving

bool done = radio.read( &maze, sizeof(unsigned char)*25);

We noticed that when we increased the packet size, more packets of information were being dropped or taking too long to transmit, so we also played around with higher data rates and power levels. This yielded less dropped packets of information that transmitted faster, but we tried to err on the side of less power consumption.

// set the power

// RF24_PA_MIN=-18dBm, RF24_PA_LOW=-12dBm, RF24_PA_MED=-6dBM, and RF24_PA_HIGH=0dBm.

radio.setPALevel(RF24_PA_MED);

//RF24_250KBPS for 250kbs, RF24_1MBPS for 1Mbps, or RF24_2MBPS for 2Mbps

radio.setDataRate(RF24_2MBPS);

Here is a video of one arduino sending the entire maze to another:

When sending maze updates, one arduino sent a 1x3 array of unsigned chars--the first two chars being the maze coordinates, and the third being new information that corresponded with that data point. The second arduino had a 5x5 maze array initialized on it so that the correct coordinate could be updated to reflect the incoming information as it was received.

//random coordinate and data values were assigned

unsigned char updates[3];

updates[0] = x;

updates[1] = y;

updates[2] = data;

//sending individual maze updates

bool ok = radio.write( &updates, sizeof(unsigned char)*3);

//receiving and updating internal memory of maze

done = radio.read( &updates, sizeof(unsigned char)*3 );

maze[updates[0]][updates[1]]=updates[2];

Here is a video of the maze updates being sent from one Arduino to another:

For the final part of the lab, we had to create a system for sending maze information from the Arduino base station to the FPGA. Since the robot was not yet operational, and we had already demonstrated that we can communicate wirelessly between the Arduinos, we generated test robot coordinate data to simulate the robot traversing the maze. Since we were able to demonstrate communication between the Arduinos, and from the Arduino to the base station, it will be relatively straightforward to link all of these devices together. Note that we did not demonstrate the communication of treasure and wall data from the Arduino to the FPGA. We have established a preliminary protocol for transmitting this data, but have not yet demonstrated this in practice.

Here is the Arduino code we wrote for this component of the lab (each part will be explained)

int delayTime = 500; //ms;

int setupTime = 50; //ms;

String inputSignals[32] = {

"00000", "01000", "10000", "11000",

"00001", "01001","10001", "11001",

"00010", "01010", "10010", "11010",

"00011", "01011", "10011", "11011",

"00100", "01100", "10100", "11100",

"00101", "01101","10101", "11101",

"00110", "01110", "10110", "11110",

"00111", "01111", "10111", "11111",};

void generateClock(){

}

void setup() {

Serial.begin(9600);

pinMode(7,OUTPUT);

pinMode(6,OUTPUT);

pinMode(5,OUTPUT);

pinMode(4,OUTPUT);

pinMode(3,OUTPUT);

pinMode(2,OUTPUT);

}

void loop() {

for (int i = 0; i<32; i++){

String signalWord = inputSignals[i];

sendWord(signalWord);

delay(delayTime);

}

}

void sendWord(String signalWord){

digitalWrite(2, LOW);

delay(setupTime);

writeWord(signalWord);

delay(setupTime);

digitalWrite(2, HIGH);

}

void writeWord(String signalWord){

for (int i = 0; i<5; i++){

if (signalWord.charAt(i) == '0'){

digitalWrite(7-i, LOW);

}

else{

digitalWrite(7-i, HIGH);

}

}

}

As discussed, the maze coordinate are encoded in a 5 bit word. In the Arduino code, we hard coded all 20 maze coordinates that the robot could possibly reach. This can be seen when we instantiate the `inputSignals` array.

Since the FPGA reads this word on the rising edge of a clock, we had to create a 1-bit psuedo-clock signal in Arduino that is sychronized to change when we are ready to read the data. This is done in the `sendWord` method. Pin 2 corresponds to our "clock". We write a `LOW` value to the clock pin, and then wait `setupTime` amount of milliseconds before proceeding to actually write the coordinates to pins 7-3. We wait `setupTime` milliseconds before flipping the clock to a `HIGH` signal. Our thinking was that there is some propagation delay in the circuit. 50 milliseconds is plenty of time for the signal to set up, and for the FPGA to sample the correct value. If we didn't clock our signal, then we would run into issues sampling the signal when it is transitioning between values.

The `writeWord` method is relatively straightforwards. It simply iterates through the word string, and then writes the values to the appropriate pin.

The output pins corresponding to the coordinate data and clock (digital pins 7-2) were connected to a voltage divider array in order to be sent to the FPGA (to be discussed in next section).

TJ, Frannie, Michael

For our sub-team, the goal of this lab was to recieve packets of information from the Arduino, and then use this information to update the VGA monitor using the FPGA. In order to accomplish this, we first built off of our team's work from Lab 3.

Changing our grid size was a fairly simple process, but did require some troubleshooting. First we updated the line `reg[7:0] grid1[2:0] [2:0]` to `reg[7:0] grid1[3:0] [4:0]` in our main module. We then assigned a color to each register in grid1 to create a checkerboard pattern.

When we uploaded the code to the FPGA, the squares were too large to view the full grid. Thus, we changed the always block in our `GridSelector` module to the following:

always @ (*) begin

GRID_X = PIXEL_COORD_X / 96;

GRID_Y = PIXEL_COORD_Y / 96;<

end

Instead of bitshifting by 7 bits (dividing by 128 as before), we decided to divide by 96, since the height of the monitor is 480 pixels. 480 pixels / 5 squares = 96 pixels / square.

The squares were now all visible, but the grid would only display 4x4. After reviewing our code for a while, we realized we hadn't updated the registers `GRID_X` and `GRID_Y` to hold enough bits. We changed both of these to 4 bit registers and the grid was now 4x5!

Next, we needed to be able to update the grid to show the current location of the robot, as well as visited locations. To do this, we needed to rework the code from last lab, which had 4 different maps stored in memory. Rather than have each possible map stored in memory, we decided to update the map dynamically.

To accomplish this, we created the following state machine:

reg[7:0] grid1[3:0][4:0];

reg[7:0] currentGrid;

//state machine

always @(posedge CLOCK_25) begin

if (GRID_X > 3) begin //colors squares that aren't in the 4x5 grid black (unreachable)

PIXEL_COLOR <= black;

end

else begin

currentGrid <= grid1[GRID_X][GRID_Y];

if (currentGrid == 0) begin //if no input, color current square white (unvisited)

PIXEL_COLOR <= white;

end

if (currentGrid[0] == 1) begin //if LSB is 1, color current square pink (visited)

PIXEL_COLOR <= pink;

end

end

end

We then created a quick test to determine whether our implementation could receive inputs over time, update the grid and remember previous locations.

We created two registers to encode x and y locations, and used the one second timer to update x and y. Then the square in the grid at location (x,y) was assigned `8'b1` which corresponded to a visited square in our FSM.

reg[2:0] x;

reg[2:0] y;

if (led_counter == ONE_SEC) begin

led_state <= ~led_state;

led_counter <= 25'b0;

if (y==3'b100) begin // you're at the bottom of the grid

y<= 3'b0;

x<=x+3'b001;

end

else begin

y <= y + 3'b1;

end

grid1[x][y] <= 8'b1;

end

Here is a video of this incrementation:

With our 'state machine' working properly, it was easy to quickly assign different states to a grid tile and have a record of visited tiles. Our last step was to get the two halves of our assignment working together. We coordinated with the Arduino half of the team to make a protocol for robot position. In this case, we used the simplest possible communication scheme: A 5 bit array, with the first 2 bits representing x position, and the latter 3 representing y position.

Our FPGA was hooked up directly to the Arduino by a set of 6 wires (5 data bits, one valid bit). This setup took a frustrating amount of time, because the DE0 Nano datasheet's pinout diagram for GPIO-1 is unintuitive (to put it lightly). Once our pins were indeed hooked up correctly (this took a lot of oscilloscope debugging on our part), we worked on properly interpreting messages from the Arduino.

We used an independent module for the reading of data from the Arduino, called inputReader:

module inputReader(

valid,

arduinoInput,

robotX,

robotY,

preX,

preY);

input valid;

input [4:0] arduinoInput;

output reg [1:0] robotX;

output reg [2:0] robotY;

output reg [1:0] preX;

output reg [2:0] preY;

always @ (posedge valid) begin

preX = robotX;

preY = robotY;

robotX = arduinoInput[4:3];

robotY = arduinoInput[2:0];

end

endmodule

We initially struggled to get data to correctly update (our screen update schema was simple - put the robot on the tile the Arduino sent, record all past tiles sent as visited, and make the rest unvisited). We observed that in general, the tiles were flipping to visited in the order we expected, but not at a constant rate. Certain tiles would change colors two at a time. This signaled to us that the FPGA was not reading the data correctly. Because our inputReader module was reading data whenever the valid bit was high, the FPGA was capturing incorrect grid values that occurred when the output bits from the Arduino were flipping. We altered both the Arduino and the FPGA code, to capture Arduino data only on the pos edge of the valid bit, thereby preventing our error.

We were able to successfully communicate information wirelessly from one Arduino to another, then display it on a screen using the FPGA. See the below video:

The goal of this milestone was to implement line following and program our robot to drive in a figure eight.

We began by attaching the light sensors to the bottom of the robot. We used the line sensors provided, and began with three sensors. After seeing what other groups approached the problem with, we were uncertain of the viability of the three sensor design. However, having two sensors on either side of the line worked very well, because any time one went over the tape, we could immediately tell the robot was off course. In fact, we could likely remove the middle sensor and retain all functionality.

We had some issues with getting consistent readings from the line sensors, so our first solution was to put the line sensors closer to the ground. We did this by switching out the wheels. This made our line readings significantly more consistent. The value of the middle sensor (reading the black tape) was typically over 900, while the outer sensors read between 100 and 300 on the white board.

We ran into significant hardware problems as we continued working on our project. When the robot was programmed to go straight, it would continuously veer to the left. This behavior suggests an improper servo calibration, but setting both servos to 90 resulted in no movement (so the servos must have been properly calibrated). We puzzled over this problem for several hours, switching out several servos and adjusting our code to unevenly power one servo more than the other. Probing the PWM signals at the Arduino output pins revealed that they were behaving as expected. Re-doing the wiring ended up fixing the problem. Likely, one signal connection was loose and sent an odd PWM signal to the servo.

Our line detection algorithm was fairly simple. We observed that the sensors measured over 800 units on black tape and under 300 units (typically under 150) on the white board. We noted that if the robot moved off the tape in either direction, one of the outer sensors would be over black tape. We then could adjust wheel speeds and turn back onto the correct path. We could detect junctions when all three sensors were over the black tape.

Because we primarily tested later in the day, where light was lower, we set the black/white threshold at 400 units - this high margin ensured that we would not get any false positives - i.e., the robot would not shift unexpectedly.

Our line detection mechanism proved to be very robust. Whenever it was tested, it could accurately detect robot position relative to the line.

The primary challenge we faced was implementing the actual motion correction. We initially adjusted the speed of each servo by 1 to 2 units. We could not figure out why line correction was not working. The serial output told us that the robot was detecting position correctly, and that it was in fact changing the PWM signal. We hypothesized that it was in fact a servo issue - and while there was a servo hardware problem (see above) - this was not the primary issue. After several hours of testing, we finally pushed the correction servo power up significantly. One servo would move fowards at +45 (relative to 90) and the other would move at -45 (relative to 90). This gave us immediate and real results. We successfully implemented line correction!

if(line[2]>400){

rightSpeed = 45;

Serial.println("LEFT");

leftSpeed = 180 ;

}

else if(line[0]>400){

leftSpeed =135;

rightSpeed = 0;

Serial.println("RIGHT");

}

else if (line[0]<400 && line[2]<400){

Serial.println("STRAIGHT");

leftSpeed = 135;

rightSpeed = 45;

}

To get the robot to turn at a junction, we specified the conditions under which the robot should continue to turn. We set the servos' speed to turn once the sensors detect a junction. Most of our code directs the robot based on whether the sensors detect that the robot is at a junction, on the line, to the left of line, or to the right of the line, but turning must be address differently. For example, as the robot turns left, the sensors (which are all currently located at the front of the bot) will first report that the robot is on the line, then left of the line, then for a period of time it will not be able to detect the line at all, then right of the line, and finally back on the line.

Thus, we implemented code that broke from the primary loop that directs the robot based on position in reference to the line, allowing the robot to continue to turn after it turns away from the junction. Since the robot begins and ends a turn directly on the line, one of two boolean requirements must be satisified in order for the robot to continue to turn: it must either have not passed the line it originally began on, or the robot isn't straight on the line yet. This is roughly implemented as shown below:

boolean straight = (line[0]<400 && line[1]>400 && line[2]<400);

boolean passed = false;

if (leftWheel == leftTurn | leftWheel == rightTurn){

if {!straight | !passed) {

if (line[1]<400) {

passed = true;

}

break;

}

passed = false;

}

Here is the video of our robot doing a figure eight:

Materials used:

We constructed an analog circuit and wrote Arduino code that detects specific frequencies.

The first stage of implementation was creating an analog circuit that would feed time-domain voltage data to the Arduino Uno. The raw voltage output from the phototransistor upon receiving incident IR radiation has a significant DC component and a relatively small voltage swing. Our goal was to have the Arduino recieve a voltage input between 0 and 5 volts, with large voltage swing. Simply feeding the phototransistor voltage through a noninverting amplifier would amplify the DC component, and render the output signal unusable. Thus, we had to essentially create a high-pass filter at the op-amp input, and then add a DC bias signal to the op-amp output to ensure that the voltage stays within the 0-5v range. We achieved this by constructing the following circuit:

C1 and R2 function as a high-filter to remove the input DC. The 2.5V DC source before R2 is intended to DC-bias the output votlage so that the Arduino can properly read the signal. The theoretical gain of the circuit is roughly 5.5K (1 + R3/R4), though the demonstrated gain is much lower. Note that the actual circuit embedded on the robot will use a voltage divider to implement the 2.5V DC source, not an actual DC voltage source.

When developing the Arduino code to detect frequencies, we wanted to ensure that we had robust code that can detect variable frequencies. We also wanted to reduce false-positive and false-negatives.

The code we developed first generates an array containing the amplitudes of the time-domain signal at frequencies related to the bin index. Afterwards, we call 3 functions that check to see if the given array encodes a 7 kHz, 12 kHz, or 17 kHz signal. Each of the functions for each frequency first computes the "expected bin" where the highest ampitude should be observed. The theory is that if a signal is indeed a 7 kHz, 12 kHz, or 17 kHz signal, then the FFT should have a peak at those frequencies. However, given the imprecision of treasure frequency, combined with the nonlinearities of the circuit, there could be small discrepancies in where the max peak for a given periodic is observed versus where it should be. To address this issue, the function, `detectedFrequency` finds the maximum value within a predefined `peakIndexWidth` of the expected peak. From inspecting the graphs of different signals, we deduced that the maximum amplitude always falls within ~5 bins of where it is expected to be (so the default value for `peakIndexWidth` equals 5). The function iterates through all the FFT values in the array that are within `peakIndexWidth` from the expected max bin. It finds the maximum value contained in this window of bins. The function then checks to see if this maximum value exceeds an absolute threshold, in order to ensure that random noise signals don't produce false positives. If the maximum value fails to exceed an absolute threshold, the function returns `false`, indicating that input signal does not encode a specific frequency. Next, the function checks to see if there are no other bins which contain a value as high as the computed maximum value in the bin window. We don't count bins less than 30, since these correspond to strong DC signals, which are always contained in the input. If the function finds other bins that have values which exceed the value around the expected peak, then the function returns `false`, indicating that the signal is not a periodid signal with the specified frequency. If the input FFT array passes all these checks, then we determine that the signal is a periodic signal of the specified frequency and return `true`. A `true` return value prompts the program to print the frequency value that it has detected.

We have included the code for this program below:

/*

fft_adc_serial.pde

guest openmusiclabs.com 7.7.14

example sketch for testing the fft library.

it takes in data on ADC0 (Analog0) and processes them

with the fft. the data is sent out over the serial

port at 115.2kb.

*/

#define LOG_OUT 1 // use the log output function

#define FFT_N 256 // set to 256 point fft

#include "printf.h"

long clockFreq = 16E6;

int divisionFactor = 32;

int conversionTime = 13;

int numSamples = 256;

float samplingFrequency = ((clockFreq/((float)divisionFactor))/conversionTime);

float binWidth = samplingFrequency/numSamples;

#include // include the library

void setup() {

Serial.begin(115200); // use the serial port

TIMSK0 = 0; // turn off timer0 for lower jitter

ADCSRA = 0xe5; // set the adc to free running mode

ADMUX = 0x40; // use adc0

DIDR0 = 0x01; // turn off the digital input for adc0

pinMode(13, OUTPUT);

printf_begin();

}

boolean detectedFrequency(float freqToDetect, char fftArray[]){

int peakIndexWidth = 5;

int absoluteMinThreshold = 80;

int centralBinIndex = int ((float)freqToDetect)/((float)binWidth);

int maximumMag = -1;

for (int i = centralBinIndex-peakIndexWidth; i<= centralBinIndex+peakIndexWidth; i++){

if (abs((int)fftArray[i])>maximumMag){

maximumMag = abs((int)fftArray[i]);

}

}

if (maximumMag<(absoluteMinThreshold){

return false;

}

for (int i = 30; i

if (abs((int)fftArray[i])>= maximumMag){

return false;

}

}

for (int i = centralBinIndex+peakIndexWidth+1; i<256; i++){

if (abs((int)fftArray[i])>= maximumMag){

return false;

}

}

return true;

}

void loop() {

while(1) { // reduces jitter

cli(); // UDRE interrupt slows this way down on arduino1.0

for (int i = 0 ; i < 512 ; i += 2) { // save 256 samples

while(!(ADCSRA & 0x10)); // wait for adc to be ready

ADCSRA = 0xf5; // restart adc

byte m = ADCL; // fetch adc data

byte j = ADCH;

int k = (j << 8) | m; // form into an int

k -= 0x0200; // form into a signed int

k <<= 6; // form into a 16b signed int

fft_input[i] = k; // put real data into even bins

fft_input[i+1] = 0; // set odd bins to 0

}

fft_window(); // window the data for better frequency response

fft_reorder(); // reorder the data before doing the fft

fft_run(); // process the data in the fft

fft_mag_log(); // take the output of the fft

sei();

if (detectedFrequency(7E3, fft_log_out)){

Serial.print("7kHz \n");

}

if (detectedFrequency(12E3, fft_log_out)){

Serial.print("12kHz \n");

}

if (detectedFrequency(17E3, fft_log_out)){

Serial.print("17kHz \n");

}

}

}

There's a lot of boilerplate code from the FFT libtary. The function we implemented was `detectedFrequency`. We also added the `if` statements at the end of the `loop` method.

Here's a video of Evan demonstrating the functionality of the circuit and Arduino code:

Materials used:

For this milestone, we implemented distance sensing. We had two sensor options: long range and short range. We at first decided that we wanted to try to use a combination of both sensors, as the short range would detect walls up close and tell the robot to turn to avoid collision, and the long range would allow for faster mapping.

We tested the short range sensor first. We wired up a simple circuit consisting of the distance sensor and the Arduino and wrote simple code to display the values outputted from the distance sensor when the block of wood was located at different distances away. We recorded data for the short range sensor when the block of wood was located in 5 cm intervals from 5 cm to 35 cm away, within the range of the specifications of the sensor we found online. We found that the specifications for the short range sensor online were consistent with the data we recorded, a graph of which is shown below.

realized that the sensors were transmitting a signal in a wide cone and were therefore detecting objects around the room that were not relevant. This is the data we got from two subsequent trials for the long range sensors.

We decided that because the long range sensors were more unpredictable, and because we thought the short range sensors would be able to more accurately predict when the robot was approaching a wall, we would implement wall detection using short range sensors.

Our code for this is shown below:

if (distanceValue > 100) {

wall = true;

servoL.write(90);

servoR.write(90);

delay(500);

}

Here is a brief video of the robot with the integrated distance sensor. As it approaches the wall, it turns at the closest junction.

For this part of the lab, we wanted to prototype the maze navigation algorithm that would later be implemented on the robot. In Java, we implemented a Depth-First-Search (DFS) algorithm for navigating through the maze. We chose a DFS algorithm for a couple reasons. Firstly, we know that it is possible to traverse all reachable parts of the maze with a DFS algorithm. Also, we figured that a DFS, in comparison to a breadth-first search, would require the least amount of "back-tracking". Our simulation results confirmed that the DFS does an efficient job at traversing the maze.

At a very basic level, a DFS essentially entails traversing as far as possible down unexplored parts of the maze until a dead end is reached, and then backtracking to the previous junction and exploring more unexplored regions of the maze. The implementation required some mechanism for keeping track of unexplored areas, as well as previously-traveled nodes to which to travel should the robot reach a dead end or a node in which every adjacent and reachable node has been visited.

There are a couple of ways to implement this kind of algorithm. A recursive, tree-based approach can implement a DFS. The downside to this approach is that it is not an intuitive way of representing the maze, especially since the 2-d rectangular maze lends itself better to an array-based representation. The upside to this node is that it is quite easy to represent adjacent and reachable nodes, and the recursive structure of the maze lends itself well to a DFS algorithm.

Another way to implement a DFS is with a 2-d array to represent the maze, and additional data structures to keep track of unexplored areas and areas the robot has visited. The advantage of this system is a more intuitive way of representing the maze (which also integrates quite well with other aspects of the project). The downside is that we have to store these additional data structures, as well as implement them. In the end, we chose to implement an array-based DFS algorithm.

To keep track of previously visited nodes, we chose to utilize a Stack data structure. Recall that a stack allows for "pushing" items to the "top" of the stack, and then extracting elements in a last-in, first-out (LIFO) manner. This is precisely what we need for our algorithm, since we will need to return to the last visited node should the robot hit a dead end of simply not have any more unvisited nodes in its immediate surroundings. The ideal implementation of a stack allows for amortized constant time element extraction and appending. This is achieved in the Java implementation of a stack.

To keep track of unexplored regions of the maze, we utilize a Set data structure. Recall that a set stores unique elements in an unordered fashion, and allows the user to check for set containment, remove items from the set, and add items to the set. This is ideal for keeping track of unexplored regions of the maze, since we only need to store 1 copy of each unexplored region, the underlying indexing of the unexplored regiion within the set is unimportant, and we need to constantly check the set for containment, as well as perform removals and add elements. The ideal implementation of a stack allows for amortized constant execution of all these tasks (via a Hash function). This is achieved in the Java implemention.

To actually store the maze, we utitlized a two dimensional Java array. Java arrays—like arrays in most languages—allow for constant time element access, removal, and updating (via indexing). This makes it very efficient at representing the maze. Note that in a tree structure, we would not be able to access an arbitrary maze coordinate in constant time, since we would need to traverse through the tree. In addition to keeping track of regions of the maze that can be occupied by the robot, the array also had to keep track of all possible locations of walls. This meant that the size of the 2d array would not be 4x5 (corresponding to the dimensions of the maze), but 7x9, in order to account for possible locations of walls. Note that the odd index values correspond to possible locations of walls, with values at these locations corresponding to the presence or non-presence of a possible wall. Additionally, locations in the maze array for which both coordinates are odd do not represent actual robot locations or potential wall locations in the maze -- these are extraneous elements that will be ignored.

For the DFS simulation, we needed to somehow represent what the "actual" maze looked like. We did this by creating an actualMaze array of equal dimensions that encoded the actual maze that the robot would be traversing. In order to maintain the integrity of the simulation, the algorithm is only able to view adjacent elements (walls or nonwalls/open spaces) in this maze (with respect to the current position of the robot).

Here's a high-level representation of the DFS algorithm. Note that the implementation of these functions can be viewed in Github repository.

public class MazeNavigator {

String[][] actualMaze = new String[7][9];

String[][] maze = new String[7][9];

int[] currPos = new int[2];

HashSet frontierSet= new HashSet();

Deque visitedStack = new ArrayDeque();

JFrame frame = new JFrame();

MazeDrawer oldMaze;

public MazeNavigator() throws InterruptedException {

resetMaze();

initializeCurrentPosition();

frontierSet.add(Arrays.toString(currPos));

initializeActualMaze();

visitedStack.push(currPos);

createJFrame();

displayActualMaze();

while (!frontierSet.isEmpty()){

updateJFrame();

Thread.sleep(500);

maze[currPos[0]][currPos[1]] = "Explored";

removeCurrentPosFromFrontier();

ArrayList reachableCells = getReachableCellsAndAddWalls();

addUnvisitedSurroundingNodesToFrontier(reachableCells);

updateCurrPosAndVisitedSet(reachableCells);

if (!frontierSet.isEmpty()){

reachableCells = getReachableCellsAndAddWalls();

addUnvisitedSurroundingNodesToFrontier(reachableCells);

updateJFrame();

}

}

printDone();

}

The class begins by instantiating the two arrays that will be used to represent the maze as it actually is ( actualMaze), and the maze as the robot currently sees it ( maze ). We then create an int array to store the current position of the robot. Next, we instantiate the frontierSet and visitedStack to keep track of the frontier (unvisited) nodes and visited nodes, respectively. Two additional objects are instantiated to be used in the Java Gui. The graphics implementation will not be comprehensively discussed in this report, but the code can be viewed on Github.

Inside the instance method, we call the resetMaze() to reset the maze that the robot will populate with data as it travels through the maze. Next, the initializeCurrentPosition() is called, which assigns the initial location of the robot. The current position is added to frontierSet . Note that equality for arrays is determined not by the values at each cell, but by the object reference. Due to this, we cannot simply store arrays of current location in the HashSet, since we need the HashSet to recognize two distinct arrays with the same elements as equivalent. We didn't feel like creating a new object to store the current location, and overriding the equals method, so we instead just converted the array to String form. The String literals can be properly checked for equality, so that two arrays with the same element values—which Java by default views as distinct—would be viewed as equivalent when represented as Strings. We also need to initialize the array that represents the actual maze. Inside this method body, the user can define an arbitrary maze for the robot to navigate. We then add the current position to the visitedStack . Note that we don't need to convert to a String form since we never need to check for equality among members of the Stack (we just need to add to the top, and remove from the top of the stack). The displayActualMaze() method is called, which displays the current maze contents to the GUI.

The DFS is continually run so long as the robot knows that there are nodes that have been unvisited. This is what the while loop condition essentially entails. So while there are still unvisited nodes, we first update the GUI ( updateJFrame() ). Since we want to see the robot "traverse" the maze, we add a time delay of 500 milliseconds. We have chosen to store information about different maze locations as Strings, though this can be an Enum or another object. In Arduino, we will end up using Chars, as this is a lightweight data structure. We update the current location to be "Explored" to reflect the fact that the robot has visited this spot. Next, we remove the current position from the frontierSet . Next, we generate a list of cells that are reachable from the current location. The robot finds all walls that are immediately adjacent to it and writes wall values to its maze array, and if there's no wall in a possible wall location, adds the open cell on the other side of the nonexistent wall spot to an array of reachable cells to be returned. The code then adds unvisited surrounding nodes to the frontierSet . Afterwards, the program finds where it should move next and updates visitedStack , as needed. This method is at the crux of the DFS. Essentially, if it finds a reachable node in its immediate surroundings that hasn't been visited yet, it will move to this node. If all the reachable nodes have been visited (or if there aren't any reachable nodes), it pops from visitedStack and moves to this node. The final if statement only applies if the robot finishes traversing the maze (there are no more nodes in frontierSet ). If the robot has reached all the reachable nodes in the maze, then it takes note of the walls in its surroundings, and writes this to the maze array. Finally, the printDone method is called, which prints "DONE" to the GUI to signify that the maze traversal is complete.

There are important considerations to keep in mind as we implement this algorithm in Arduino. Most importantly, we have severe memory restrictions in Arduino. We won't have the luxury of using memory-intensive objects to store maze data. This means that instead of using Strings and integers, which take several bytes to encode, we will likely just use Char's, which are encoded in 1 byte. We will also have to deal with the fact that Arduino doesn't come with Stacks or Set data structures, so we will have to either import an external library that implements these objects, or create our own implementation. Another thing to keep in mind is how the robot will detect its surroundings. It won't simply check values in an array. Instead, it will only be able to tell if there's a wall right next to it. We will have to create a system for translating the presence of walls next to the robot into coordinates in the maze.

Moving out of the realm of simulation, our team needed to put in a lot of work to properly get our robot to navigate the maze. First, we had to install our new line sensors and reimplement line sensing and turning. Next, we had to install and calibrate our wall sensors. Finally, we had to integrate the entire system and implement a high-level state machine to control the robot and navigate the maze.

Anyone who has followed our group closely has certainly understood that we have had our fair share of struggles implementing line following. Our line sensors have always seemed a bit wonky, and our servos never seemed to move in a sensible fashion. We implemented fairly hacky, non-resilient solutions to the line following/turning milestones. We decided that a solid way to move forward would be to buy a 'better' line sensor online. We turned to the Pololu QTR-3RC Reflectance Sensor Array. This particular device presented a better alternative to our existing sensors in a variety of ways: First, it integrated three sensors in one board, perfectly positioned to follow the black tape; second, it used digital connections, reducing the burden on our analog pins; third, it had an existing Arduino library. We settled on final PID control values of KP = 0.1 and KD = 0.3

Because of the aforementioned library, integrating our existing line sensing code was extremely simple. We replaced our simple analog reads with the library's sensor read methods. We also used Pololu's calibration and PID control code to augment our line following. We continued using our funky servo calibration values for turning. It was a straightforward and successful process, that lead to a consistent line following and turning process.

This was mainly just a physical task. We basically dissassembled our robot fully in order to mount two more line sensors. We took this opportunity to clean up wiring and make our robot more visually appealing. We had a bit of an issue getting wall sensing to work properly, but it turns out that we just needed to redo our soldering. Check out this photo!

Since this was a complicated milestone, we spent a lot of time organizing our code, preparing for integration. Beginning this milestone, our code for each module (wall detection, line sensing, treasure detection, etc.) were all in separate files. We now have a structure for our final robot code. We created a high level finite state machine, which identifies which state the robot is in (between junctions, at a junction, just starting out). Each state corresponds to different behaviors and interfaces between different modules. Here is the basic state machine:

//JUNCTION state: detects walls and treasures, chooses next direction to move

if (state == JUNCTION) {

stop();

detectWalls();

if (!wallRight){

turnRight();

stop();

}

else if (!wallLeft) {

turnLeft();

stop();

}

else if (wallRight && wallMid && wallLeft) {

turnRight();

stop():

turnRight();

stop();

}

else {

turnRight();

stop();

}

state = BETWEEN;

}

//BETWEEN state: follows a line until it reaches the next junction

if (state == BETWEEN) {

Serial.println("BETWEEN");

position = qtrrc.readLine(sensors);

error = position - 1000;

junction();

if (isJunction) {

state == JUNCTION;

}

else {

goStraight();

}

}

//DONE state: robot has completed task

//TODO

}

Before we implemented maze following, we spent time unit testing and making sure that the robot could move and navigate and detect walls (essentially reimplementing milestones 1 and 2). This proved to be a much more difficult task then first expected.